10.06.2024 | Michael Veser

How Data is Stored for use with AI Models

This article explains the technical basics of generative AI systems. It is intended to provide a better understanding of our articles on security issues relating to the use of AI systems.

In the world of artificial intelligence, large language models (LLMs) are playing an increasingly central role in the processing and analysis of human language. In order to use these complex models efficiently with own data, large amounts of text must be converted into a form that can be processed by AI models. This process involves two crucial steps: tokenization and embedding. In this article, we will explore how these techniques are used to prepare and store data for use by LLMs. I will explain the technical mechanisms of these processes, illustrate their importance in the context of machine language processing, and show how they form the basis for the advanced capabilities of language models. By understanding these fundamental technologies, we can better understand how LLMs handle complex analysis tasks and gain deeper insights into the underlying technology. Using a typical use case, the analysis of log data, we will take a closer look at the steps involved.

Classic approach: Parsing

Parsing unstructured data plays a central role in analyzing log files. This process transforms the incoming data stream into a structured form by using regular expressions, format recognition and, in some cases, machine learning. Specific information such as timestamps, IP addresses and server names are extracted from the data. Once the data is structured, further analyses such as frequency evaluations, anomaly detection and sorting according to specific parameters can be carried out efficiently.

One of the biggest advantages of this approach is the powerful search function, which makes it possible to quickly access certain known parameters. However, there are also challenges: The initial effort required to set up the parsing system is often considerable. In addition, the possibilities for recognizing similarities between different data sets are limited. This is because parsing is primarily geared towards identifying explicit information rather than analyzing hidden patterns or relationships between data points. Due to variances in the input data, the susceptibility to errors can also quickly become a limiting factor.

Tokenization: Dealing with unstructured information

In contrast to parsing, which focuses on recognizing specific content in a consistent form, tokenization divides the input text into smaller units - so-called tokens - without taking the specific content into account. This process can be done in different ways: Some approaches use predefined lengths to segment the text, while others rely on separators such as spaces or punctuation marks to identify natural boundaries within the text.

In addition, there are methods that divide the text into larger sections or “chunks”. These chunks can comprise whole sentences or parts of sentences and are used to divide the text into logically coherent units that can then be further analyzed.

In the next step, we will take a closer look at the different tokenization approaches and discuss both their advantages and disadvantages in detail. This overview will allow us to develop a deeper understanding of how these methods affect text analysis and which techniques are best suited for specific applications.

Punctuation-based tokenization

In punctuation-based tokenization, the process does not usually divide the text into fixed-length tokens, although there may be a predefined maximum length. Rather, this type of tokenization separates the text sequence at each punctuation or space character, which usually results in short tokens. A special sub-model of this method is “whole sentence tokenization”, in which an entire sentence is treated as a single token. This allows the text to be processed in larger, coherent units that can better preserve context.

Space-based tokenization

In “whitespace tokenization”, the method uses spaces as separators to divide the text into individual tokens. When analyzing log files, this method produces a result that is very similar to the structures generated by parsing methods. By using spaces as clear delimiters between the tokens, the text can be effectively divided into discrete, easily manageable units. This type of tokenization is particularly useful for processing clear, space-separated data such as log entries.

Tokenization based on fixed length definition

With fixed-length tokenization, the text is divided into tokens of equal size regardless of spaces or punctuation marks. This method systematically divides the text sequence into units of a specific length without taking into account natural language boundaries such as words or sentences. This type of tokenization can be particularly useful in scenarios where uniform processing of the data is desired and the position of each character in the text could be significant.

Conclusion

The information content of the data can depend heavily on the tokenization method chosen. Especially when embedding the tokens, both too short and too long tokens can lead to a loss of information. It is therefore crucial to choose the tokenization method carefully to ensure that the relevant data features are effectively captured and preserved.

Embedding in the vector space

Following the decomposition of the input data stream, each token is assigned a unique vocabulary ID. These IDs serve as numerical representatives of the tokens, each representing a word, punctuation mark or other linguistic unit. The underlying vocabulary, which is developed during the pre-training of the model, typically includes an extensive set of entries that represent the recognized words and symbols.

The assignment of these vocabulary IDs standardizes and greatly simplifies the processing of text data. By reducing each token to a number, the models can operate independently of the original language or formatting of the texts, speeding up the processing and reducing memory requirements. In addition, these numerical representations allow the model to recognize relationships between the data and make machine learning processes more efficient. Such processes rely heavily on numerical operations and the clear numerical mapping through vocabulary IDs facilitates the integration of linguistic information into mathematical models.

Once assigned an ID, the tokens can move on to the next phase of language processing: embedding. In this phase, the tokens, which are now available as numerical IDs, are converted into multidimensional vectors. These vectors, also known as embeddings, represent the semantic and syntactic properties of the tokens in a continuous vector space. They enable the model to recognize and learn deep linguistic patterns, which forms the basis for complex tasks such as translation, text summarization and question answering.

How embedding models work

Embedding models are crucial for effectively embedding content in a multidimensional vector space by converting the semantic and syntactic properties of textual data into numerical vectors. These models analyze the context in which words and phrases occur, including their frequency and relationships to other words in the text. Through this analysis, embedding models generate dense vector representations, which are then positioned in vector space so that similar or related terms are close to each other. This makes it possible to measure and map the semantic proximity between different words or phrases. In addition to the position in the vector space, the “direction of view” within the space also plays a major role. For example, the term “mother” in relation to “father” would lie in a similar viewing direction to “woman” in relation to “man”.

Another important aspect of embedding models is their ability to be adapted through fine-tuning. This means that an already pre-trained model can be further trained with more specific data to increase the accuracy of the embedding, especially when it comes to specialized terminology or specific linguistic contexts. Fine-tuning allows models to be fine-tuned to specific requirements and needs, which can improve the effectiveness of the model in specialized applications.

This ability to translate text into precise, contextually relevant vectors makes embedding models a powerful tool in the world of machine language processing. They allow us to gain deeper and more nuanced insights into the meanings behind words and their relationships, making an indispensable contribution to the development of intelligent, context-aware systems. This functionality lays the foundation for similarity search.

Similarity search

Having understood tokenization and the embedding of data in a multidimensional space, we can turn our attention to the question of how this embedded data can be used to identify similarities between different log entries, for example. This is where algorithms such as cosine similarity and k-nearest neighbors (kNN) come into play.

Cosine similarity measures the cosine of the angle between two vectors in vector space. This metric is particularly useful because it is independent of the size of the vectors, which means that only the direction of the vectors and not their length is taken into account. The value range of this metric is between -1 and 1, with 1 indicating a perfect match (same orientation), 0 indicating no correlation and -1 indicating exactly opposite directions. The advantages of cosine similarity are its low complexity and fast implementation, which makes it ideal for systems that need to perform fast similarity searches. However, it is important to set appropriate thresholds for the similarity search to find an optimal balance between including irrelevant results and overlooking important results.

As an alternative or complement to cosine similarity, the k-nearest neighbors (kNN) algorithm provides a method for classifying data points based on the closest already classified points around them. kNN is an intuitive approach: a new point is assigned to the classes of its nearest neighbors, where “k” indicates the number of neighbors to be considered. With a k of 1, the point is simply assigned to the class of its nearest neighbor. With a larger k, the most frequent classes within the nearest k points are taken into account. It is recommended to choose an odd number for k in order to have a clear majority when assigning classes. However, a major drawback of kNN is its scalability, especially for large datasets, as the distance to all other points must be precalculated. To overcome the challenges of hardware efficiency, adapted versions such as “Approximate nearest neighbor” exist, which enable a faster, albeit slightly less accurate, neighborhood search.

In summary, both cosine similarity and kNN provide powerful tools for similarity search in embedded data. While cosine similarity is characterized by low complexity and fast execution, kNN provides an intuitive, albeit potentially computationally intensive, method for data classification that is particularly effective on smaller datasets or in customized implementations. Both methods are crucial for tasks such as anomaly detection and the retrieval of relevant search results by allowing the relationships between data points to be precisely determined.

Test with log files

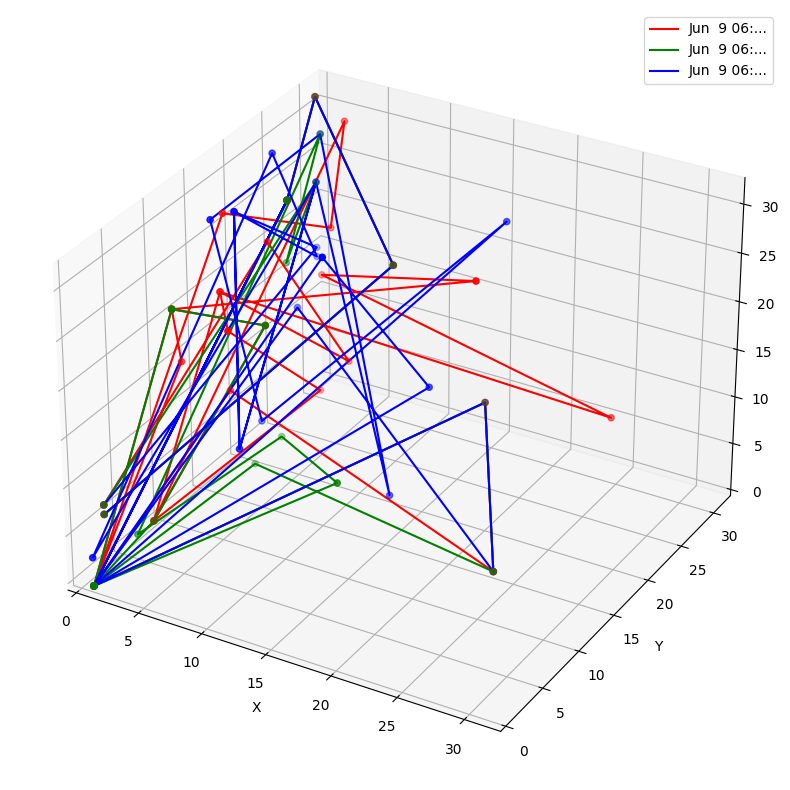

To test the whole theory with a concrete example, let’s embed three lines of a logfile into a simplified three-dimensional vector space:

Conclusion

In this article, we have explored the crucial processes of tokenization and embedding that are necessary to prepare text data for use in Large Language Models (LLMs). We have seen how various methods of tokenization break down texts into smaller, manageable units, which are then represented by embedding models in a multidimensional vector space. The ability of these models to capture the semantic and syntactic properties of the language and convert them into numerical form is fundamental for further tasks.

We have also looked at the importance of similarity searches using algorithms such as cosine similarity and k-nearest neighbors (kNN), which allow us to identify and exploit relationships between embedded data, for example for classification or anomaly detection.

By fine-tuning these models, the embeddings can be further refined to better account for specific requirements and contexts, which will become important in one of the later articles.